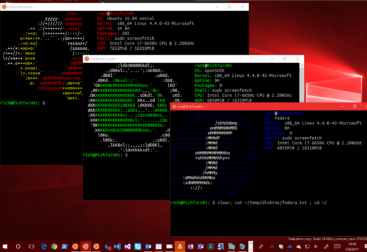

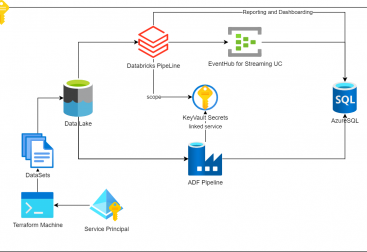

I’ve been working on a Databricks and Delta tutorial for all of you. I published it as a notebook, and you can grab it here.

We will load some sample data from the NYC taxi dataset available in Databricks and store it as a table. We will then use Python to do some manipulation (extracting the month and year from the trip time), which adds two new columns to our DataFrame, and we will check how the files are saved in the Hive warehouse. There we will notice some junk data: partitioning by month and year created folders that we are not supposed to have. To get rid of these bad records, we will apply a filter both the Python way and the SQL way.
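To show what the two new columns and the partition folders look like, the steps above can be sketched in plain Python without Spark. This is an illustration under assumptions, not the notebook's code: the rows are invented, the timestamp column name `tpep_pickup_datetime` is assumed, and the example treats January 2019 as the month the loaded data is supposed to cover. In PySpark the same ideas would typically map to `withColumn` with `pyspark.sql.functions.year` and `month`, `df.write.partitionBy("year", "month")`, and `df.filter(...)` or a SQL `WHERE` clause.

```python
from datetime import datetime

# Illustrative rows standing in for taxi trips. The column name
# "tpep_pickup_datetime" is an assumption about the dataset's schema.
trips = [
    {"trip_id": 1, "tpep_pickup_datetime": "2019-01-05 08:15:00", "fare": 12.5},
    {"trip_id": 2, "tpep_pickup_datetime": "2019-01-17 22:40:00", "fare": 7.0},
    {"trip_id": 3, "tpep_pickup_datetime": "2008-12-31 23:59:00", "fare": 4.5},  # junk date
    {"trip_id": 4, "tpep_pickup_datetime": "2019-02-01 00:03:00", "fare": 9.0},  # outside January
]


def add_year_month(rows, ts_col="tpep_pickup_datetime"):
    """Return copies of the rows with two new columns: 'year' and 'month'."""
    out = []
    for row in rows:
        ts = datetime.strptime(row[ts_col], "%Y-%m-%d %H:%M:%S")
        out.append({**row, "year": ts.year, "month": ts.month})
    return out


def partition_paths(rows):
    """Hive-style folder names produced when partitioning by year and month."""
    return sorted({f"year={r['year']}/month={r['month']}" for r in rows})


def keep_month(rows, year, month):
    """Filter out records whose timestamp falls outside the expected month."""
    return [r for r in rows if r["year"] == year and r["month"] == month]


enriched = add_year_month(trips)
print(partition_paths(enriched))
# ['year=2008/month=12', 'year=2019/month=1', 'year=2019/month=2']

clean = keep_month(enriched, year=2019, month=1)
print([r["trip_id"] for r in clean])
# [1, 2]
```

Each stray timestamp produces its own folder, such as `year=2008/month=12`, which is why filtering on the new columns removes the partitions we did not expect.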

Then we will load another month of data as a temporary view and contrast it with a Delta table, where we can run updates and all sorts of DML.
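A minimal plain-Python sketch of that contrast follows. The classes `ReadOnlyView` and `DmlTable` are hypothetical stand-ins, not Spark or Delta APIs: in this sketch the first models a temporary view over raw files that only serves queries, and the second models a Delta table that also accepts DML. On Databricks the Delta side would be SQL along the lines of `UPDATE trips_feb SET fare = 0 WHERE fare < 0`, where `trips_feb` is a hypothetical table name.

```python
class ReadOnlyView:
    """Stand-in for a temporary view over raw files: queryable, not updatable."""

    def __init__(self, rows):
        self._rows = [dict(r) for r in rows]  # private copy of the data

    def select(self, predicate=lambda r: True):
        return [dict(r) for r in self._rows if predicate(r)]

    def update(self, predicate, changes):
        raise NotImplementedError("UPDATE is not supported on this view")


class DmlTable(ReadOnlyView):
    """Stand-in for a Delta table: the same reads, plus UPDATE and DELETE."""

    def update(self, predicate, changes):
        matched = 0
        for r in self._rows:
            if predicate(r):
                r.update(changes)
                matched += 1
        return matched  # number of rows changed

    def delete(self, predicate):
        before = len(self._rows)
        self._rows = [r for r in self._rows if not predicate(r)]
        return before - len(self._rows)  # number of rows removed


# Illustrative "another month" rows; values are invented.
feb = [{"trip_id": 10, "fare": 5.0}, {"trip_id": 11, "fare": -3.0}]
view, table = ReadOnlyView(feb), DmlTable(feb)

try:
    view.update(lambda r: r["fare"] < 0, {"fare": 0.0})
except NotImplementedError as err:
    print("view:", err)  # view: UPDATE is not supported on this view

print(table.update(lambda r: r["fare"] < 0, {"fare": 0.0}))  # 1
print(table.delete(lambda r: r["trip_id"] == 10))  # 1
print(table.select())  # [{'trip_id': 11, 'fare': 0.0}]
```

Both classes copy the input rows, so fixing data in the table leaves the view (and the original list) untouched.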

As a last step, we will load some master data and perform a join. For more on Delta Lake, you can follow this tutorial: https://delta.io/tutorials/delta-lake-workshop-primer/
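Conceptually, the join attaches master attributes to each trip through a shared key. The sketch below is a plain-Python hash join that assumes a hypothetical `zone_id` key and a small zone lookup table as the master data; the post does not say which master data the notebook uses. In PySpark the equivalent call would be something like `trips_df.join(zones_df, on="zone_id", how="inner")` (DataFrame names hypothetical), or a SQL `JOIN ... ON`, and Spark chooses its own physical join strategy.

```python
def hash_join(facts, master, key, how="inner"):
    """Join fact rows to master rows sharing the same key.

    how="inner" drops facts with no master match; how="left" keeps them
    and fills the master columns with None. Assumes the key is unique in
    the master data and every master row has the same columns.
    """
    if how not in ("inner", "left"):
        raise ValueError("how must be 'inner' or 'left'")
    index = {m[key]: m for m in master}  # build side: the small lookup table
    master_cols = [c for c in (master[0] if master else {}) if c != key]
    joined = []
    for f in facts:  # probe side: the larger trip data, scanned once
        m = index.get(f[key])
        if m is not None:
            joined.append({**f, **{c: m[c] for c in master_cols}})
        elif how == "left":
            joined.append({**f, **{c: None for c in master_cols}})
    return joined


# Illustrative data only; IDs and names are not taken from the real dataset.
trips = [
    {"trip_id": 1, "zone_id": 1, "fare": 12.5},
    {"trip_id": 2, "zone_id": 2, "fare": 30.0},
    {"trip_id": 3, "zone_id": 99, "fare": 6.0},  # no matching master row
]
zones = [
    {"zone_id": 1, "borough": "Manhattan"},
    {"zone_id": 2, "borough": "Queens"},
]

print(hash_join(trips, zones, "zone_id"))
print(len(hash_join(trips, zones, "zone_id", how="left")))  # 3
```

A hash join suits this shape of problem because master data is usually small enough to index in memory, while the trip data only needs a single pass.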

Enjoy coding!